Learning Contact-Intensive Tasks Using Multimodal Perception and Reinforcement Learning

Learning multimodal contact-rich skills using reinforcement learning

Humans effectively manipulate deformable objects in everyday tasks, such as opening bags or candy and finding keys in pockets, by leveraging their multimodal perception. Teaching such tasks to robots is a non-trivial problem.

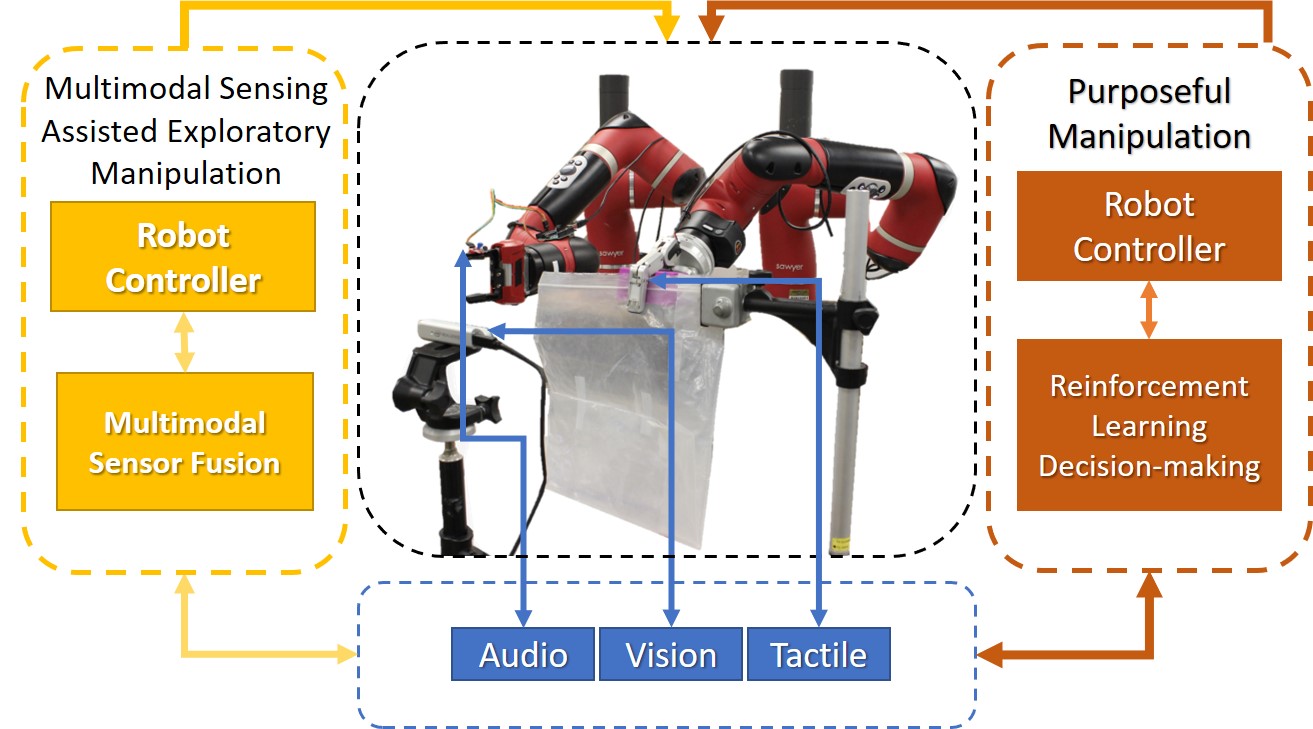

To enable robots to learn multimodal manipulation, especially of deformable objects, we built a framework for human-like exploration and purposeful manipulation. As shown in Figure 1, the framework allows robots to autonomously learn about and adapt to a class of deformable objects. It then enables robots to manipulate those objects purposefully to complete a task, by leveraging the knowledge base created during the exploration stage. Please refer to our paper for more details.

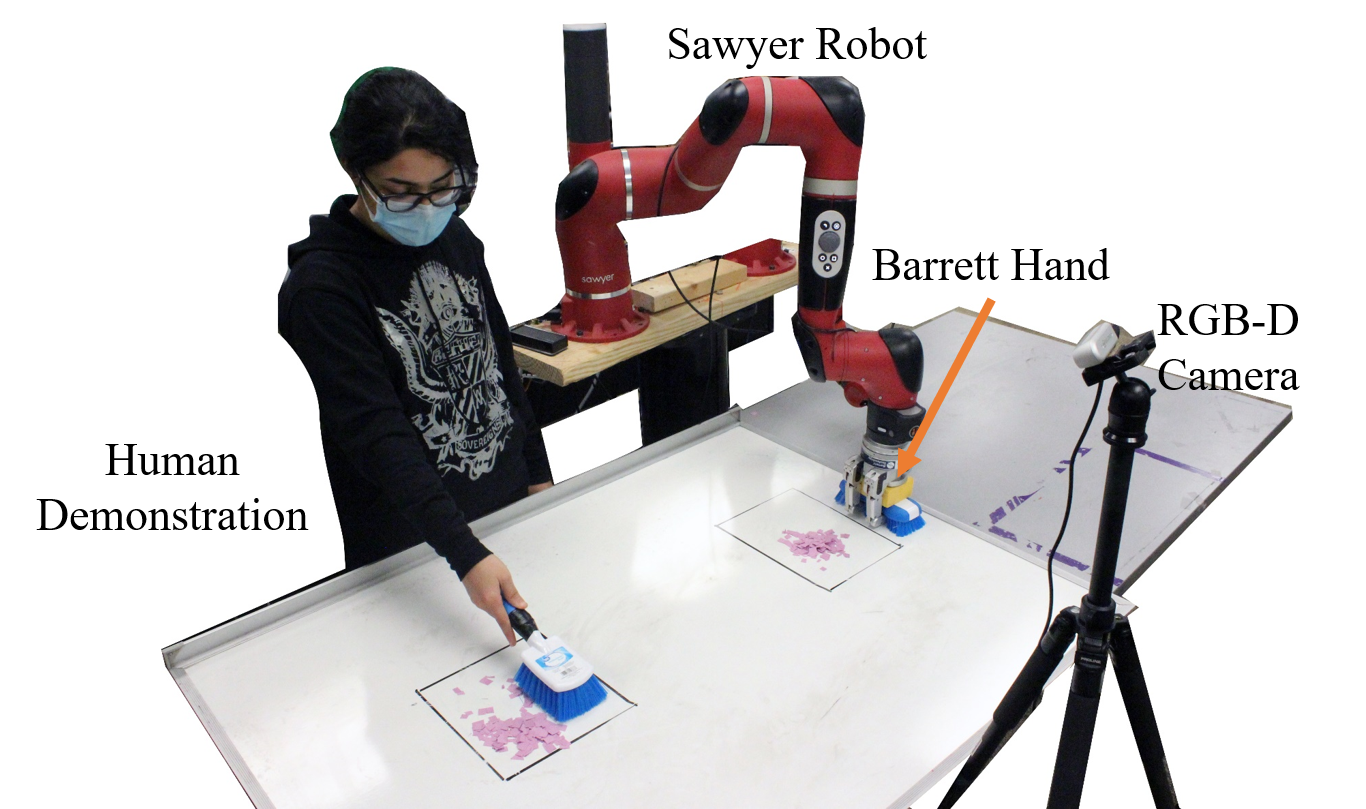

Teaching robots contact-intensive tasks, such as peeling, scooping, and writing, from demonstrations alone is another challenge I contributed to. In this work, we use a reinforcement learning-based methodology that does not require explicit reward engineering to enable the robot to learn such contact-intensive tasks. The robot system uses two modalities: touch (force and tactile feedback) and vision (videos of demonstrations). Figure 2 shows the experimental setup and the results. The full paper is available here.
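The paper's exact formulation is not reproduced here. Instead, the following is a minimal sketch of one common, generic way to obtain a reward from demonstrations rather than hand-specifying it. At each step, the robot is rewarded for how closely its current touch and vision features match the demonstration frame at the same step. The `Observation` layout, the alignment of demonstration frames by step index, and the weights `w_touch` and `w_vision` are all hypothetical assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Observation:
    # Hypothetical feature layout, for illustration only.
    force: Sequence[float]    # force/torque reading
    tactile: Sequence[float]  # flattened tactile-sensor values
    visual: Sequence[float]   # embedding of a video frame


def _dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def demo_reward(obs: Observation, demo: List[Observation], t: int,
                w_touch: float = 1.0, w_vision: float = 1.0) -> float:
    """Negative weighted distance between obs and the demo frame at step t.

    Assumes demo frames are aligned to RL steps by index; steps past the
    end of the demonstration are compared against its final frame.
    """
    ref = demo[min(t, len(demo) - 1)]
    touch = _dist(obs.force, ref.force) + _dist(obs.tactile, ref.tactile)
    vision = _dist(obs.visual, ref.visual)
    return -(w_touch * touch + w_vision * vision)


# Usage: an observation identical to the demo frame earns the maximum reward (0).
demo = [
    Observation([0.0, 0.0, 1.0], [0.1, 0.2], [0.5, 0.5]),
    Observation([0.0, 0.0, 2.0], [0.3, 0.4], [0.6, 0.4]),
]
print(demo_reward(Observation([0.0, 0.0, 2.0], [0.3, 0.4], [0.6, 0.4]), demo, 1))
```

Weighting touch and vision separately lets a learner trade off contact fidelity against visual similarity. A real system would more likely use learned feature encoders and a more robust temporal alignment than a fixed step index.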